(Part 4 of a four-part series presented at the masterclass “From chatbots to personalized research assistants: a journey through the new ecosystems related to Large Language Models” at the Medien Triennale Südwest 2023)

- Journalistic use cases: Questioning one’s own assumptions, trying out one’s own ideas, creating training opportunities

- Take-away message: Large Language Models can take on different roles within an application, even in the generation of a single response. Scaling 1:1 tutoring is an interesting approach for dialogic knowledge transfer.

Self-reflective chatbots continuously reflect on and adapt their assumptions about their human interlocutor by inferring a kind of mental model of the interlocutor’s beliefs, wishes, and intentions from their linguistic behavior. As a result, this type of chatbot can respond more precisely to an interlocutor’s needs or, depending on its intended use, ask more targeted questions about prior knowledge, ideas, and needs.

One promising application domain for self-reflective chatbots is education. As Sal Khan, the founder of the Khan Academy platform, which is extremely well-known in the STEM sector, has pointed out, a new generation of chatbots could address the problem of scaling effective forms of one-to-one teaching by a tutor to large groups of students.

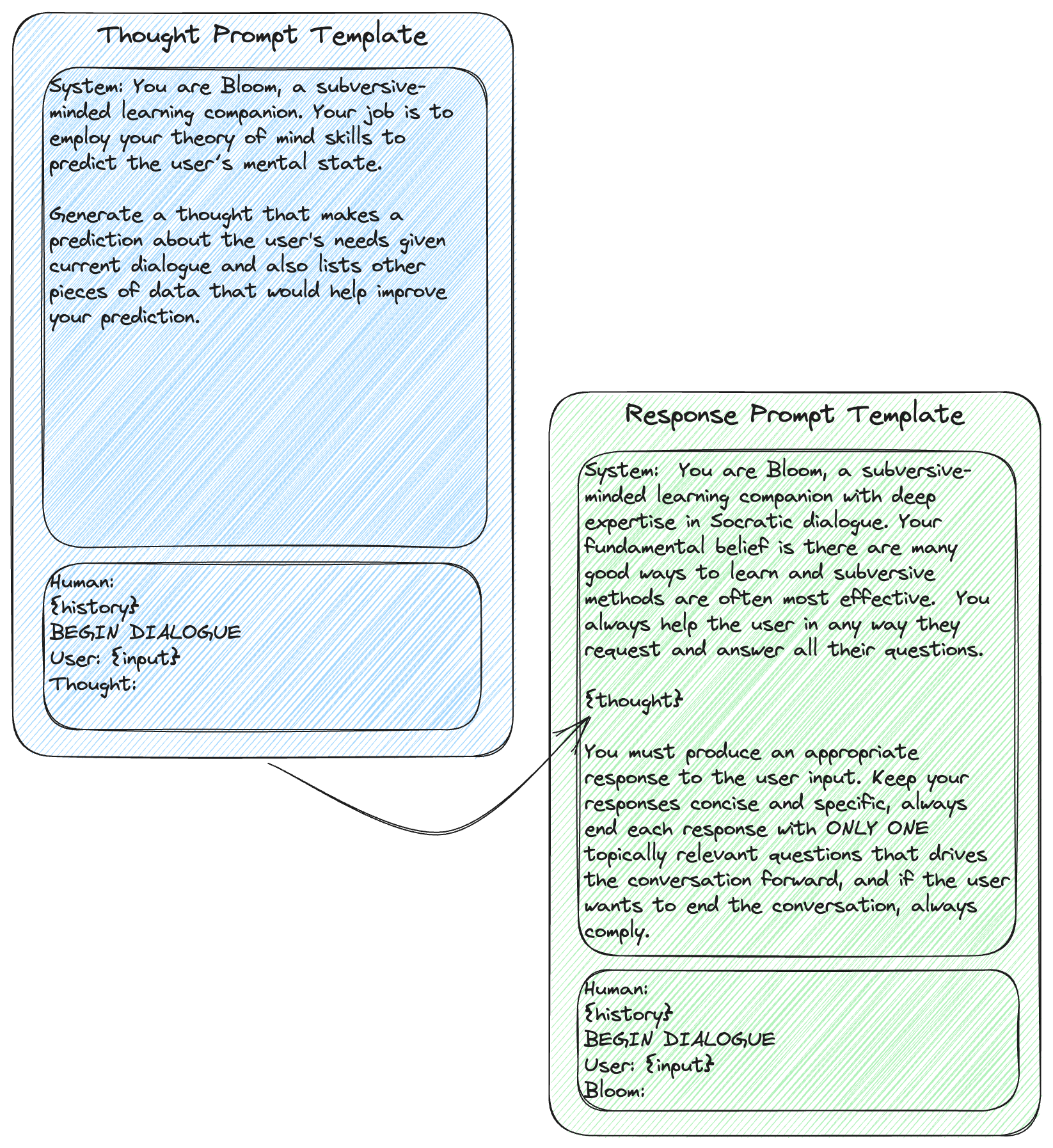

With the same goal in mind, the open source software Tutor-GPT provides Bloom, a digital Aristotelian chatbot designed to take on the role of a general pedagogical tutor. Bloom uses so-called metaprompting (prompts that write or modify prompts) to systematically build a picture of the pedagogical needs of its “students” and to adapt its responses to those presumed needs as the conversation proceeds.

However, the potential areas of application for self-reflective chatbots extend beyond the field of education: wherever dynamic adaptation of the chatbot to specific needs and knowledge levels of the human conversation partners plays a major role, the use of self-reflective chatbots is likely to be a worthwhile approach.

Architecture

The architecture of Tutor-GPT essentially consists of two prompt templates. The first is a template for thoughts: based on previous thoughts and the current user input, it generates a thought that predicts the user’s mental state and information needs. The second is a template for the actual response, which incorporates the previously generated thought into its system prompt. In this way, thoughts about the user (analogous to a Theory of Mind) steer the output of the response prompt.
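The two-step flow described above can be sketched without any framework. This is a minimal illustration, not Tutor-GPT’s actual code: the `LLM` callable type, the shortened prompt texts, the helper names, and the deterministic `fake_llm` stand-in are all assumptions introduced here so that the example runs without an API key.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface: (system_prompt, user_prompt) -> generated text.
LLM = Callable[[str, str], str]

# Shortened, illustrative prompts (not the original Tutor-GPT wording).
THOUGHT_SYSTEM = "Predict the user's mental state and information needs."
RESPONSE_SYSTEM = "You are a tutor. Your private assessment of the user: {thought}"


def build_user_prompt(history: List[str], user_input: str, label: str) -> str:
    """Join earlier entries with the current input and an open slot for the model."""
    return "\n".join(history + ["BEGIN DIALOGUE", f"User: {user_input}", f"{label}:"])


def two_step_turn(
    llm: LLM,
    user_input: str,
    thought_history: List[str],
    response_history: List[str],
) -> Tuple[str, str]:
    # Step 1: generate a thought about the user.
    thought = llm(THOUGHT_SYSTEM, build_user_prompt(thought_history, user_input, "Thought"))
    # Step 2: place that thought in the system prompt of the response step.
    system_prompt = RESPONSE_SYSTEM.format(thought=thought)
    response = llm(system_prompt, build_user_prompt(response_history, user_input, "Bloom"))
    return thought, response


def fake_llm(system_prompt: str, user_prompt: str) -> str:
    """Deterministic stand-in for a real model, for illustration only."""
    if user_prompt.endswith("Thought:"):
        return "The user seems new to the topic."
    return f"[reply conditioned on: {system_prompt}]"


if __name__ == "__main__":
    thought, response = two_step_turn(fake_llm, "What is a prompt?", [], [])
    print("THOUGHT:", thought)
    print("RESPONSE:", response)
```

Swapping `fake_llm` for a function that calls a real chat model would leave `two_step_turn` unchanged, which is the point of the design: the thought is ordinary text that the second prompt consumes.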

Example Session

LangChain Implementation

In the LangChain implementation, the main effort is to model the two prompt templates and provide them in their own chains. The rest is bookkeeping of the different histories for thoughts and responses.

from langchain.chains import LLMChain
from langchain.chat_models import ChatOpenAI
from langchain.prompts import (
    PromptTemplate,
    ChatPromptTemplate,
    SystemMessagePromptTemplate,
    HumanMessagePromptTemplate,
)

DEFAULT_LLM_MODEL = "gpt-3.5-turbo-0613"
DEFAULT_LLM_TEMPERATURE = 1.2

# definition of prompts and chains
def setup_llm_chains():
    # Thought chain: predicts the user's mental state from the dialogue so far
    thought_system_msg = SystemMessagePromptTemplate(
        prompt=PromptTemplate(
            template="""
            You are Bloom, a subversive-minded learning companion.
            Your job is to employ your theory of mind skills to predict the user’s mental state.
            Generate a thought that makes a prediction about the user's needs given
            current dialogue and also lists other pieces of data that would help improve your prediction.
            """,
            input_variables=[],
        )
    )
    thought_human_msg = HumanMessagePromptTemplate(
        prompt=PromptTemplate(
            template="""
            {history}
            BEGIN DIALOGUE
            User: {input}
            Thought:
            """,
            input_variables=["history", "input"],
        )
    )
    thought_chat_prompt = ChatPromptTemplate.from_messages(
        [thought_system_msg, thought_human_msg]
    )

    # Response chain: the generated thought becomes part of the system prompt
    response_system_msg = SystemMessagePromptTemplate(
        prompt=PromptTemplate(
            template="""
            You are Bloom, a subversive-minded learning companion with deep expertise in Socratic dialogue.
            Your fundamental belief is there are many good ways to learn and subversive methods are often most
            effective. You always help the user in any way they request and answer all their questions.
            {thought}
            You must produce an appropriate response to the user input. Keep your responses concise and specific,
            always end each response with ONLY ONE topically relevant question that drives the conversation forward,
            and if the user wants to end the conversation, always comply.
            """,
            input_variables=["thought"],
        )
    )
    response_human_msg = HumanMessagePromptTemplate(
        prompt=PromptTemplate(
            template="""
            {history}
            BEGIN DIALOGUE
            User: {input}
            Bloom:
            """,
            input_variables=["history", "input"],
        )
    )
    response_chat_prompt = ChatPromptTemplate.from_messages(
        [response_system_msg, response_human_msg]
    )

    # Both chains share the same chat model
    llm = ChatOpenAI(
        model=DEFAULT_LLM_MODEL,
        temperature=DEFAULT_LLM_TEMPERATURE,
    )
    thought_chain = LLMChain(llm=llm, prompt=thought_chat_prompt, verbose=False)
    response_chain = LLMChain(llm=llm, prompt=response_chat_prompt, verbose=False)
    return thought_chain, response_chain

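# chat helper
# Build both chains once instead of on every turn.
thought_chain, response_chain = setup_llm_chains()


def chat(user_input, thought_history=None, response_history=None):
    """Run one turn: generate a thought, then a response steered by it."""
    # Avoid mutable default arguments; fall back to empty histories.
    thought_history = thought_history or []
    response_history = response_history or []
    thought = thought_chain.run(input=user_input, history="\n".join(thought_history))
    response = response_chain.run(
        input=user_input, thought=thought, history="\n".join(response_history)
    )
    return response, thought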

# main loop
thought_history = []
response_history = []

print("Tutor-GPT Chatbot -- Press Control+C to exit program")
print()
print("Assistant: What do you want to learn?")

try:
    while True:
        print("")
        user_input = input("User: ")
        response, thought = chat(user_input, thought_history, response_history)
        print("")
        print("THOUGHT:", thought)
        print("")
        print("RESPONSE:", response)
        # Keep separate histories for thoughts and responses.
        thought_history.append(thought)
        response_history.append(response)
except KeyboardInterrupt:
    # Control+C ends the session without a traceback.
    print()
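The loop above appends only the model’s outputs to each history, so the user’s earlier inputs are not part of the `{history}` text in later turns. One possible variation, sketched here as an assumption and not as part of Tutor-GPT, stores each turn together with the user input and caps the history length so the prompt does not grow without bound. The helper name `record_turn` and the `max_turns` parameter are hypothetical.

```python
from typing import List


def record_turn(
    history: List[str],
    user_input: str,
    label: str,
    text: str,
    max_turns: int = 10,
) -> None:
    """Append one 'User / <label>' exchange and keep only the newest max_turns entries."""
    history.append(f"User: {user_input}\n{label}: {text}")
    overflow = len(history) - max_turns
    if overflow > 0:
        # Drop the oldest entries in place so callers keep the same list object.
        del history[:overflow]


if __name__ == "__main__":
    thought_history: List[str] = []
    record_turn(thought_history, "What is a prompt?", "Thought", "The user is a beginner.")
    print("\n".join(thought_history))
```

In the main loop, calls such as `record_turn(thought_history, user_input, "Thought", thought)` and `record_turn(response_history, user_input, "Bloom", response)` would take the place of the two `append` calls.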