(Part 3 of a four-part series presented at the Masterclass “From chatbots to personalized research assistants: a journey through the new ecosystems related to Large Language Models” at the Medien Triennale Südwest 2023)

- Journalistic use cases: Support for complex research tasks

- Take-away message: Large Language Models can handle planning and result synthesis within an agent that uses different tools. Agents are still unreliable and, for most production applications, should be replaced by suitable chains of Large Language Models. However, this may change relatively quickly.

The old dream of autonomous agents, programs that interact independently with a (virtual) world and tackle a wide variety of problems according to their own plans without outside help, is being dreamed more often again. Large Language Models offer new means of realizing such agents because of their flexibility and their tolerance of ambiguous situations and inputs.

In autonomous LLM-based agents, a language model is first given an identity or role, a task with objectives, and background information about the task. With this information, the language model prompts itself in a defined way (auto-prompting) to find a way of solving the task under the given objectives. This basic architecture can be adapted and extended in several ways: by linking multiple agents with different roles and functions, by designing the memory available to the agents, and by giving them access to external tools such as web search, databases, programming environments, or other programs.
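The basic set-up of role, task, objectives, background, and self-prompting can be illustrated in a few lines of Python. This is a minimal sketch, not the implementation of any particular framework: `AgentSpec`, `build_initial_prompt`, and `auto_prompt` are hypothetical names, `llm` is any function that maps a prompt string to a reply string, and the convention that the model ends the loop with a reply starting with `DONE` is an assumption made only for this example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentSpec:
    """Hypothetical container for what an autonomous agent receives up front."""
    role: str
    task: str
    objectives: list[str] = field(default_factory=list)
    background: str = ""


def build_initial_prompt(spec: AgentSpec) -> str:
    """Combine role, task, objectives and background into the first prompt."""
    lines = [f"You are {spec.role}.", f"Your task: {spec.task}"]
    if spec.objectives:
        lines.append("Objectives:")
        lines.extend(f"- {goal}" for goal in spec.objectives)
    if spec.background:
        lines.append(f"Background: {spec.background}")
    lines.append("What is your next step?")
    return "\n".join(lines)


def auto_prompt(llm: Callable[[str], str], spec: AgentSpec, max_turns: int = 5) -> list[str]:
    """Feed each model reply back into the next prompt (auto-prompting).

    Assumption for this sketch: the model ends the loop by replying "DONE ...".
    """
    prompt = build_initial_prompt(spec)
    replies = []
    for _ in range(max_turns):
        reply = llm(prompt)
        replies.append(reply)
        if reply.startswith("DONE"):
            break
        # The model's own output becomes part of its next input.
        prompt = f"{prompt}\n{reply}\nWhat is your next step?"
    return replies
```

Memory, multiple cooperating agents, and tool access would all be layered on top of a loop like this one.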

Plan-and-Execute Agents

We will now look at an agent architecture inspired by BabyAGI and the “Plan-and-Solve” paper. An experimental implementation of this architecture is already part of LangChain.

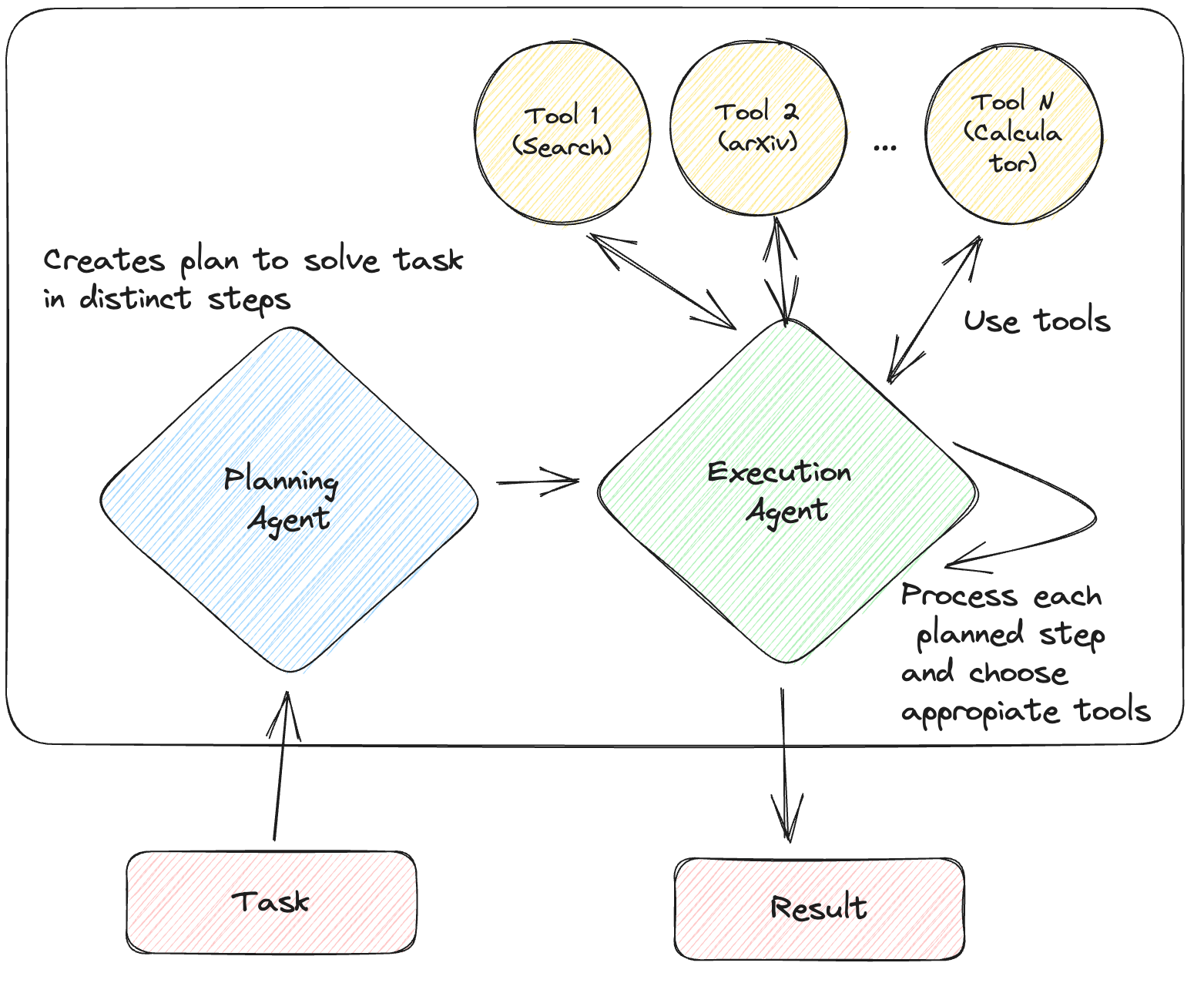

So-called plan-and-execute agents achieve a goal by first planning what to do and then executing the subtasks. Planning and execution are performed by two different agents. Planning is almost always done by a simple agent using a Large Language Model. Execution is usually done by a separate agent that has access to different defined tools.
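The division of labour between the two agents can be sketched as follows. `plan_and_execute` is a hypothetical helper, and the planner and executor are plain callables standing in for the two LLM-backed agents. Handing the executor the list of finished steps mirrors what the LangChain log further below shows, but this is an illustration under those assumptions, not LangChain's implementation.

```python
from typing import Callable

# Hypothetical signatures for the two agents: a planner maps a task to a list
# of steps; an executor maps (finished (step, response) pairs, current step)
# to a response.
Planner = Callable[[str], list[str]]
Executor = Callable[[list[tuple[str, str]], str], str]


def plan_and_execute(task: str, planner: Planner, executor: Executor) -> str:
    """Plan once, then run the executor on every step in order."""
    steps = planner(task)  # planning happens once, up front, without tools
    history: list[tuple[str, str]] = []  # (step, response) pairs
    for step in steps:
        # The executor sees a copy of all earlier results plus the current step.
        response = executor(list(history), step)
        history.append((step, response))
    # The response to the last step is the answer returned to the user.
    return history[-1][1] if history else ""
```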

Before we get into the details of this architecture and the agents' execution steps, the following demo gives a basic idea of how agents work. In this example, the agent is given the task of answering whether LK-99 is a superconductor. After a plan of the steps needed to answer this question has been created, these steps are processed by an agent that has access to a search engine and other data sources (arXiv, PubMed, and Wikipedia).

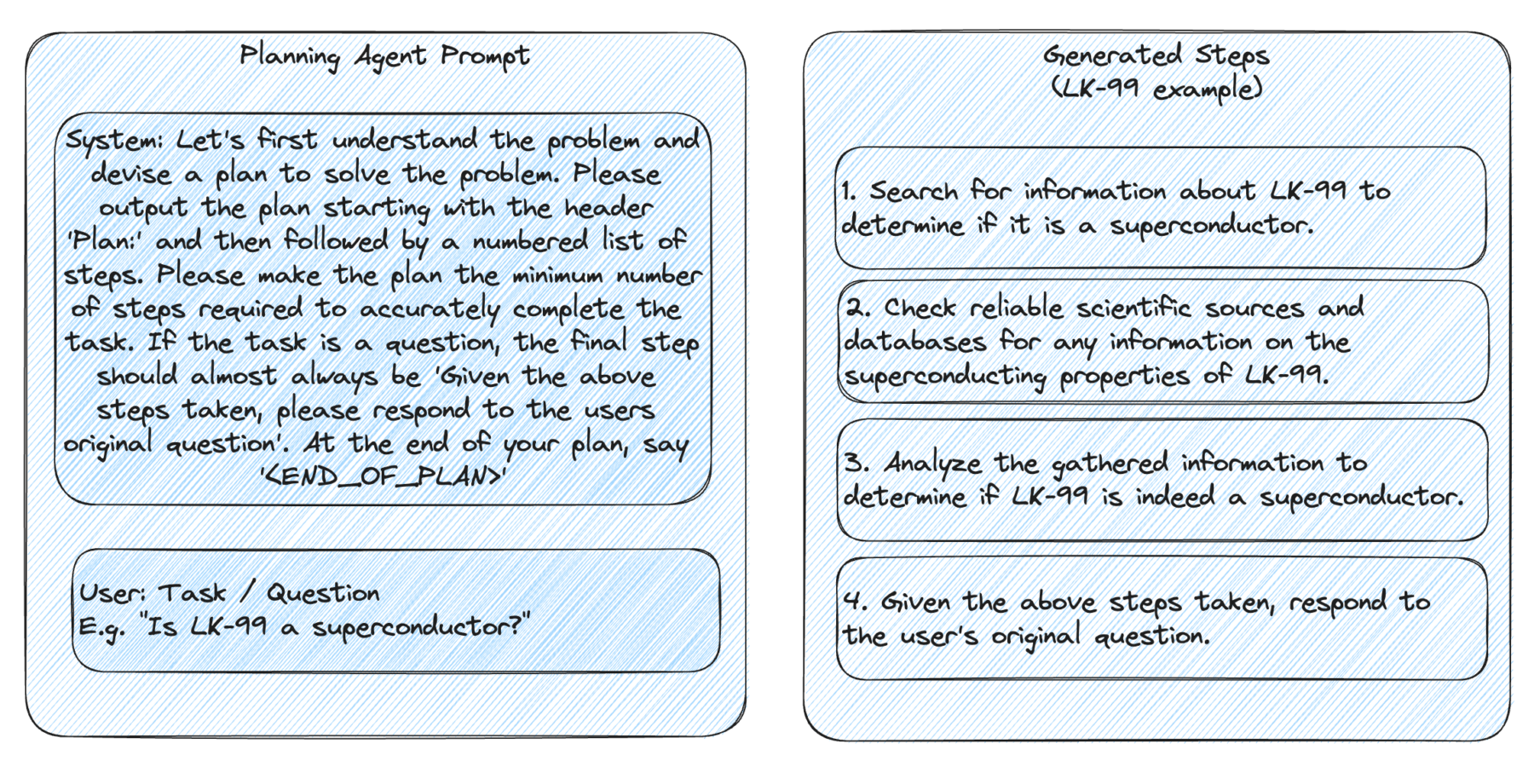

Planning Agent

The planning stage is quite simple: a system prompt specifies the task of generating the shortest possible list of individual steps for a given task. The user part of the prompt then describes the task to be solved, e.g. “Is LK-99 a superconductor?”

The response generated by the language model is converted into a data structure in which the individual steps are separate elements. These elements are then passed step by step to the execution agent.
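Turning the planner's reply into a list of steps is essentially text parsing. The sketch below assumes the reply follows the format requested by the planner prompt shown in the log further below (a `Plan:` header, a numbered list, and an `<END_OF_PLAN>` marker). `parse_plan` is a hypothetical helper, not LangChain's own output parser, and it ignores steps that span several lines.

```python
import re


def parse_plan(text: str) -> list[str]:
    """Split a 'Plan:' header followed by a numbered list into step strings."""
    # Ignore anything after the end marker that the planner prompt asks for.
    text = text.split("<END_OF_PLAN>")[0]
    # Keep only the part after the 'Plan:' header, if there is one.
    if "Plan:" in text:
        text = text.split("Plan:", 1)[1]
    steps = []
    for line in text.splitlines():
        # A step line looks like "1. Search for ..." (leading spaces allowed).
        match = re.match(r"\s*\d+\.\s+(.*\S)", line)
        if match:
            steps.append(match.group(1))
    return steps
```

Each returned string then becomes one element that is handed to the execution agent.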

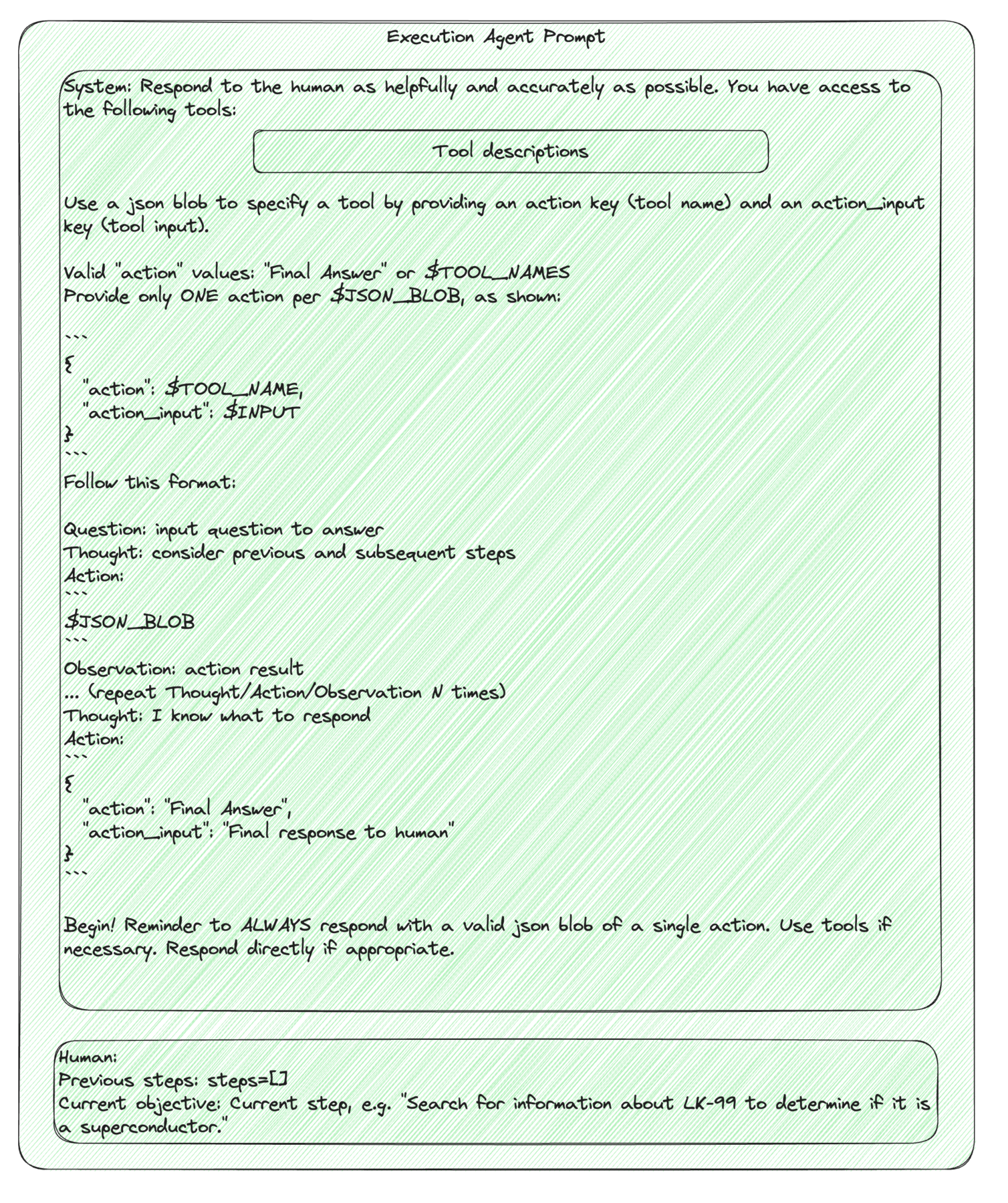

Execution Agent

The prompt for the Execution Agent, on the other hand, is quite complex. However, this complexity is easy to unravel if we look at the prompt's individual components.

The system prompt first describes the role and general task of the agent. This is followed by a description of the available tools (which we will look at in detail in a moment) and the desired way of using these tools by defining a specific format for calling them.
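The calling format asks the model to return a single JSON object with the keys `action` and `action_input`, wrapped in a fenced block, as the system prompt in the log below spells out. A minimal parser for that format might look like this; `parse_action` is a hypothetical name, and accepting an optional `json` tag after the opening fence is an assumption about what models sometimes emit.

```python
import json
import re

FENCE = "`" * 3  # three backticks, i.e. a Markdown code fence
# A fenced JSON object; the non-greedy body ends at the first "}" that is
# followed by a closing fence, so nested objects in action_input are kept.
ACTION_BLOB = re.compile(FENCE + r"(?:json)?\s*(\{.*?\})\s*" + FENCE, re.DOTALL)


def parse_action(model_output: str) -> tuple[str, object]:
    """Extract (tool name, tool input) from a model reply."""
    match = ACTION_BLOB.search(model_output)
    if match is None:
        raise ValueError("no JSON action blob found in model output")
    blob = json.loads(match.group(1))
    return blob["action"], blob["action_input"]
```

A reply whose action is `"Final Answer"` yields the answer text as the second element instead of a tool input.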

Then the so-called thought-action-observation loop is defined. Thoughts about the posed question lead to the selection of an action, which in turn leads to an observation, which triggers new thoughts that select the next action, and so on, until a final answer is eventually generated.
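The loop itself is a simple control structure. In this sketch, `run_tao_loop` is a hypothetical helper, the `model` argument stands in for the LLM and is assumed to return an already parsed step (a dict with `thought`, `action`, and `action_input`), and tools are plain functions keyed by name. The `max_iterations` cap is an addition of this sketch, since nothing in the loop itself guarantees that it terminates.

```python
from typing import Callable


def run_tao_loop(
    model: Callable[[list[str]], dict],
    tools: dict[str, Callable[[str], str]],
    question: str,
    max_iterations: int = 5,
) -> str:
    """Repeat thought -> action -> observation until the model gives a final answer."""
    transcript = [f"Question: {question}"]
    for _ in range(max_iterations):
        step = model(transcript)  # hypothetical LLM call returning a parsed step
        transcript.append(f"Thought: {step['thought']}")
        if step["action"] == "Final Answer":
            return step["action_input"]
        observation = tools[step["action"]](step["action_input"])
        transcript.append(f"Action: {step['action']}({step['action_input']!r})")
        transcript.append(f"Observation: {observation}")
    return "Agent stopped: iteration limit reached."
```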

The user prompt contains the list of steps already processed, together with their responses, and the current objective, i.e. the step currently being processed.
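A readable version of that user prompt can be assembled as below. `StepResult` and `build_user_prompt` are hypothetical names, and the exact wording is an assumption: the LangChain implementation used later inserts Python object representations instead, visible in the log as `steps=[(Step(value=...), StepResponse(response=...))]`.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    """Hypothetical record of a finished step and the executor's response to it."""
    step: str
    response: str


def build_user_prompt(previous: list[StepResult], current_step: str) -> str:
    """Render finished steps with their responses, followed by the current objective."""
    lines = ["Previous steps:"]
    if not previous:
        lines.append("(none)")
    for number, result in enumerate(previous, start=1):
        lines.append(f"{number}. {result.step}")
        lines.append(f"   Response: {result.response}")
    lines.append(f"Current objective: {current_step}")
    return "\n".join(lines)
```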

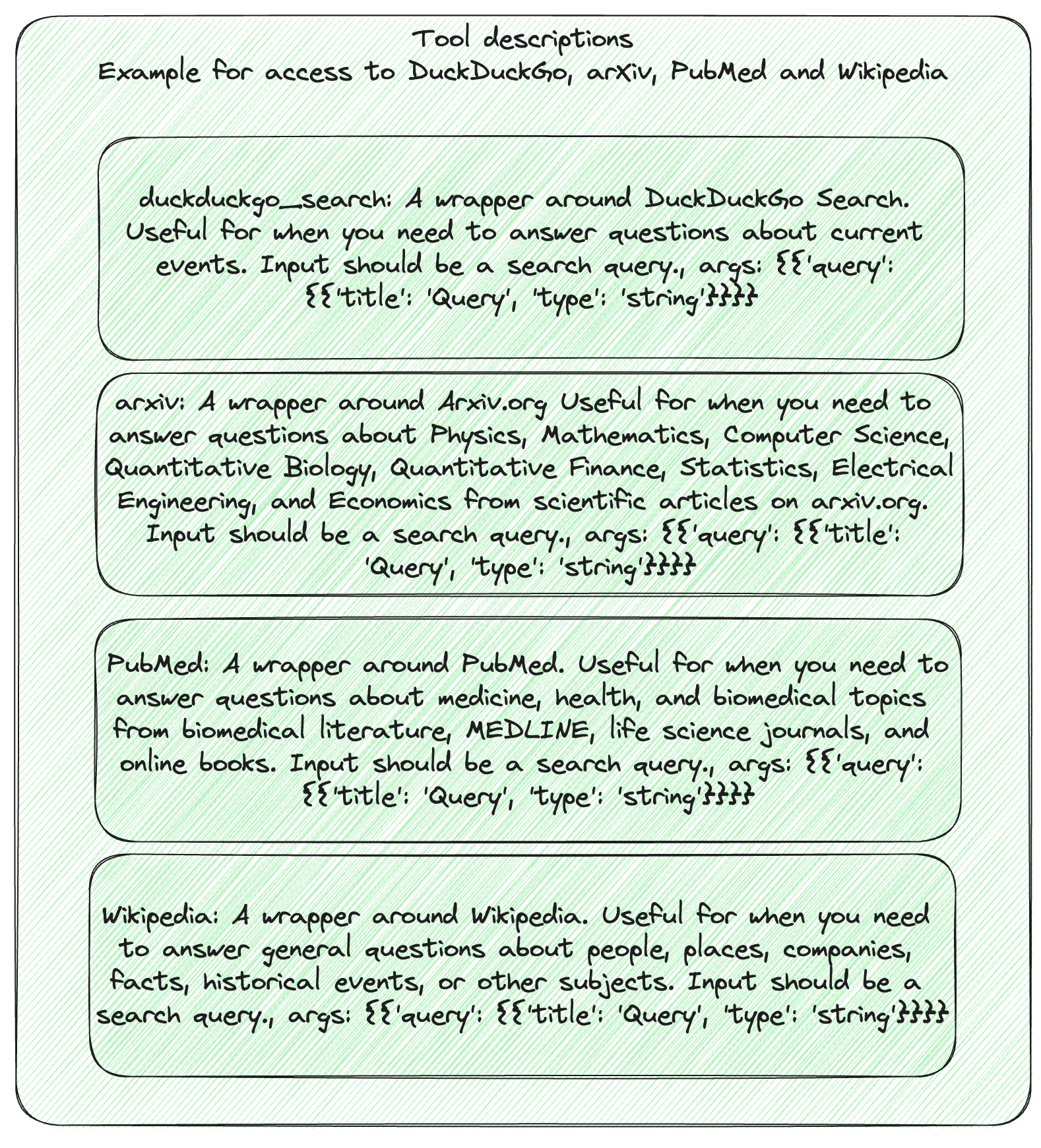

A tool description consists of a precise description of the intended use, including the questions and domains for which this tool can be used, and a specification of the arguments that a tool expects.

For example, the duckduckgo_search tool is described as “A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. Input should be a search query.” The format of the input is defined so that it must contain a key “query” with a value of type string.
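A tool description therefore pairs free text for the model with a machine-checkable argument schema. The sketch below uses a hypothetical `ToolSpec` class, not LangChain's tool classes; the description and schema are copied from the log, while `render` only approximates how the tool appears in the executor's prompt and `validate` checks just presence and string type.

```python
from dataclasses import dataclass


@dataclass
class ToolSpec:
    """Hypothetical tool description: what the model reads and what inputs are accepted."""
    name: str
    description: str
    args: dict  # schema of the expected arguments, e.g. {"query": {"type": "string"}}

    def render(self) -> str:
        """Format the tool roughly as it appears in the executor's system prompt."""
        return f"{self.name}: {self.description}, args: {self.args}"

    def validate(self, tool_input: dict) -> None:
        """Raise if an argument is missing or a string argument has another type."""
        for key, schema in self.args.items():
            if key not in tool_input:
                raise ValueError(f"missing argument {key!r}")
            if schema.get("type") == "string" and not isinstance(tool_input[key], str):
                raise TypeError(f"argument {key!r} must be a string")


ddg_search = ToolSpec(
    name="duckduckgo_search",
    description=(
        "A wrapper around DuckDuckGo Search. Useful for when you need to answer "
        "questions about current events. Input should be a search query."
    ),
    args={"query": {"title": "Query", "type": "string"}},
)
```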

Execution Steps

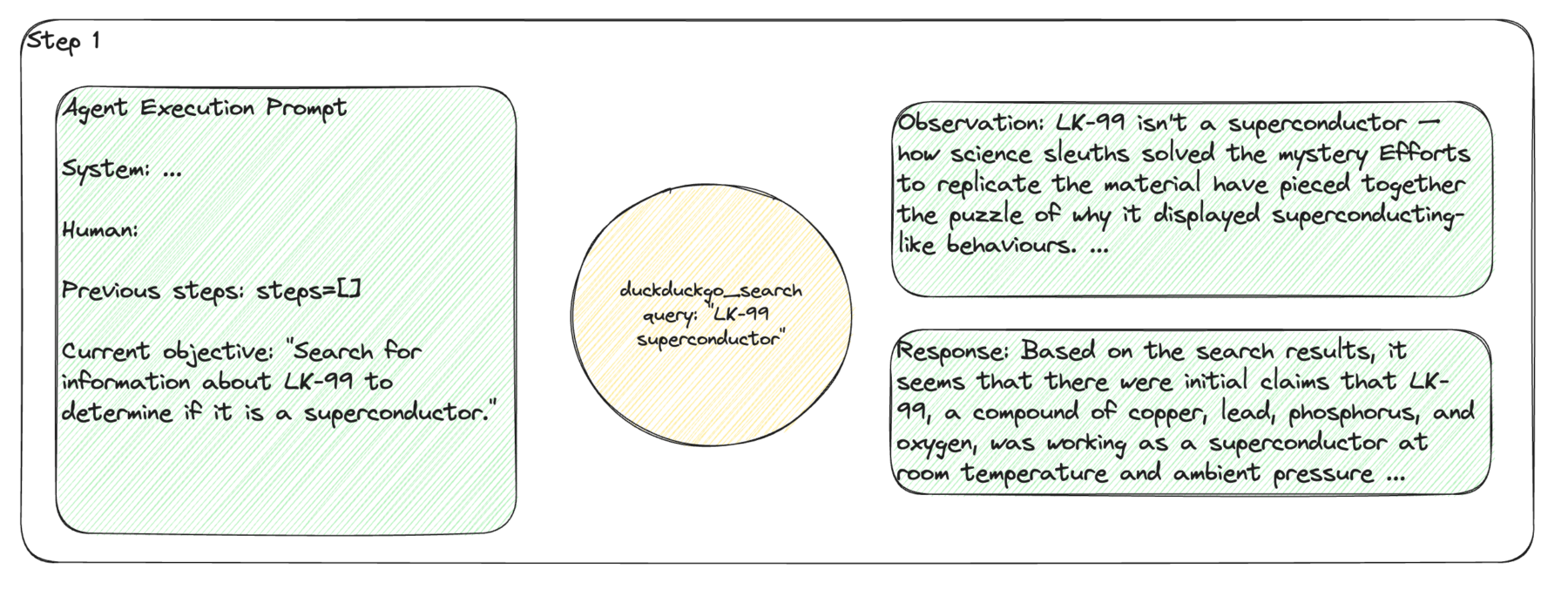

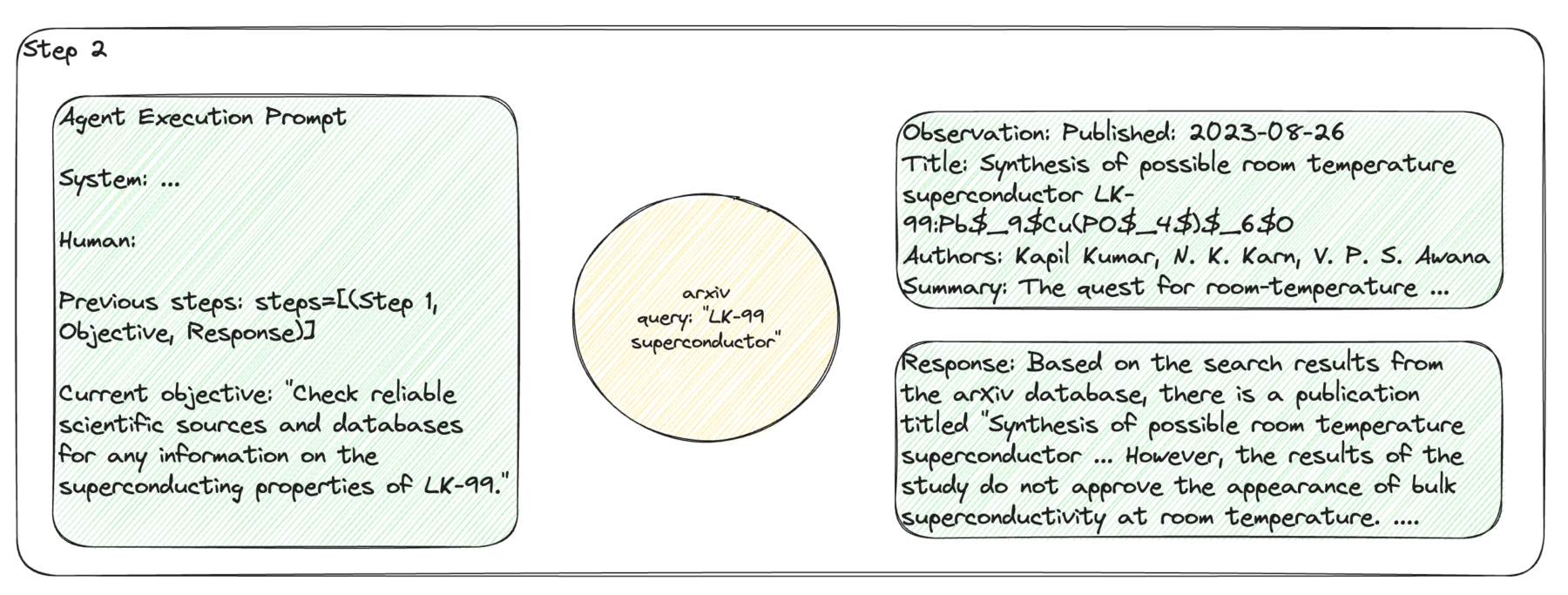

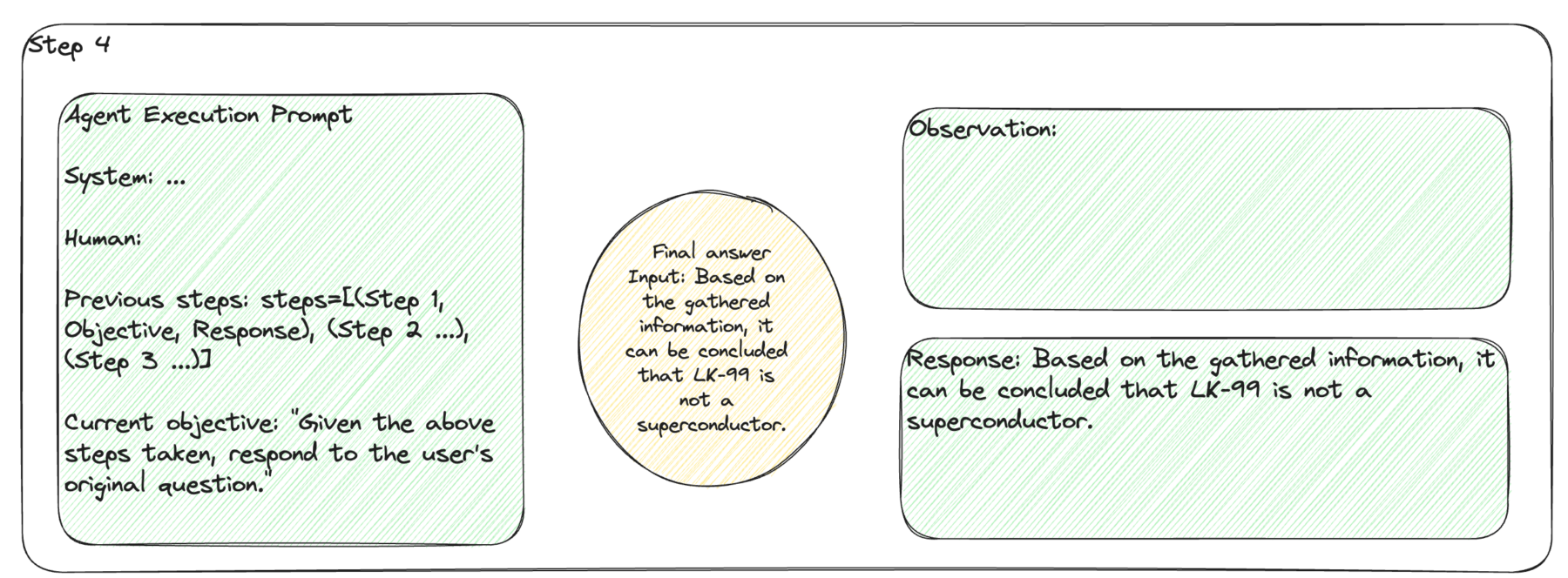

In an execution environment, the Execution Agent is run once for each planned step. The results of the previous steps, together with the current step, form the input from which the agent decides which action to execute. The result of that action is observed and combined into a response.
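How an observation flows back into the model can be seen in the log below: after each tool call, the executor is prompted again with the earlier action and its observation appended under the note “This was your previous work (but I haven't seen any of it! I only see what you return as final answer)”. The sketch reproduces that idea; `format_scratchpad` is a hypothetical helper, and its layout only approximates the one in the log.

```python
PREVIOUS_WORK_NOTE = (
    "This was your previous work (but I haven't seen any of it! "
    "I only see what you return as final answer):"
)


def format_scratchpad(intermediate_steps: list[tuple[str, str]]) -> str:
    """Turn (action, observation) pairs into text appended to the next prompt."""
    if not intermediate_steps:
        return ""  # first call for a step: nothing to report yet
    parts = [PREVIOUS_WORK_NOTE]
    for action, observation in intermediate_steps:
        parts.append(f"Action: {action}")
        parts.append(f"Observation: {observation}")
    parts.append("Thought:")  # invite the model to continue reasoning
    return "\n".join(parts)
```

Given this text, the model can either request another tool or, as in the demo, write the response for the current step.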

In the first step, a web search for “LK-99 superconductor” is performed to obtain up-to-date information on this issue.

In the second step, the agent combines the knowledge from the web search with the second planned step, to check reliable scientific sources and databases for any information on the superconducting properties of LK-99, and generates a query to arXiv.

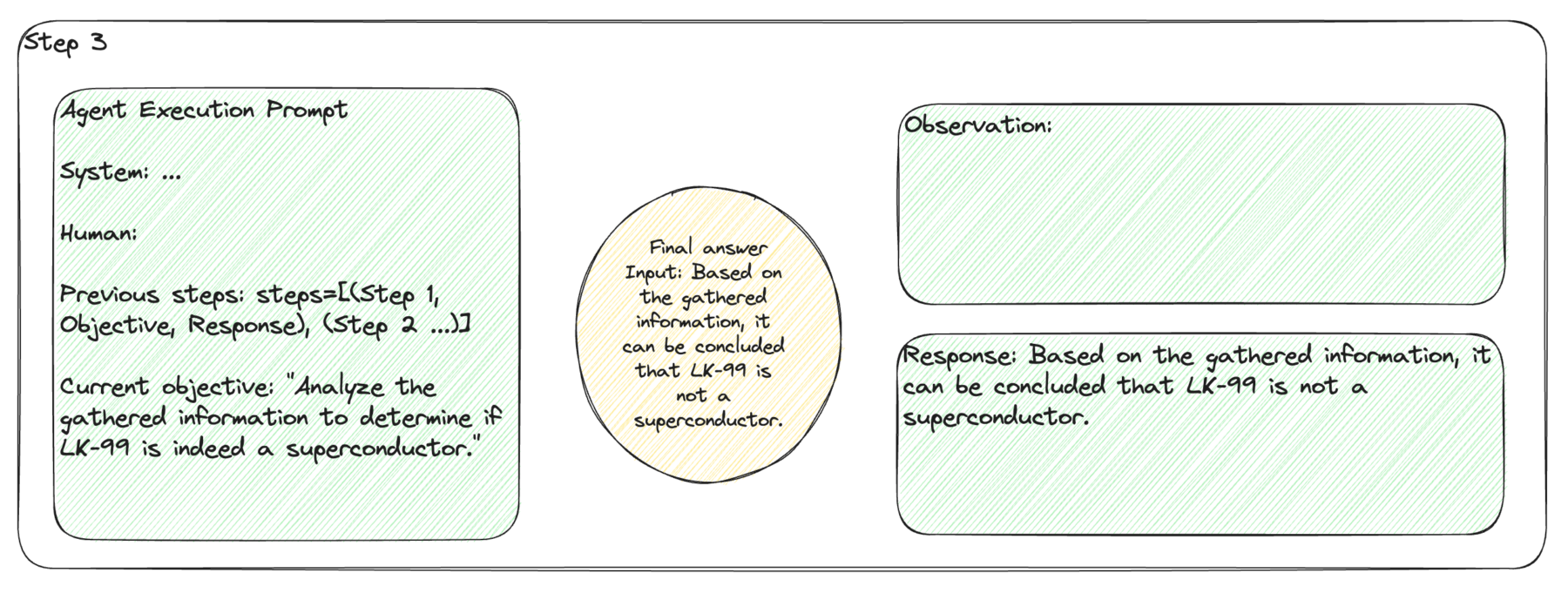

With the knowledge from the web search and the arXiv search, the third planned step is processed: “Analyze the gathered information to determine if LK-99 is indeed a superconductor.” The agent sees no need for further information from a tool and generates a final answer directly.

The final step uses all the information gathered in the previous steps to generate the final answer as the response to the user.

Agents are fascinating because of their autonomous approach to problem solving. They try to move freely through problem spaces and gather relevant information. Sometimes they are successful in doing so. Sometimes they fail because they find the wrong sources or because they simply get caught in unproductive loops that do not advance the understanding of a problem. Autonomous LLM-based agents are still a nascent technology that is too unreliable for most production applications. However, this could change relatively quickly.
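A hard cap on iterations is one common safeguard against unproductive loops (LangChain's `AgentExecutor`, for example, has a `max_iterations` parameter). A complementary heuristic, sketched here as a hypothetical helper that is not taken from any library, flags an agent that repeats the same tool call with the same input several times in a row.

```python
def is_stuck(actions: list[tuple[str, str]], window: int = 3) -> bool:
    """Return True if the last `window` (tool, input) pairs are all identical."""
    if len(actions) < window:
        return False
    recent = actions[-window:]
    return all(action == recent[0] for action in recent)
```

An executor could call `is_stuck` after every action and stop with a partial answer, or report failure, once it returns True.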

LangChain Implementation

Since LangChain contains an experimental implementation of plan-and-execute agents, the code needed to set up the agent used in this example is very short.

```python
# Assumes langchain, langchain-experimental and openai, plus the client
# libraries used by the tools, are installed and OPENAI_API_KEY is set.
from langchain.chat_models import ChatOpenAI
from langchain_experimental.plan_and_execute import (
    PlanAndExecute,
    load_agent_executor,
    load_chat_planner,
)
from langchain.agents import load_tools
from langchain.callbacks import StdOutCallbackHandler

# This is an overly verbose setup so that all intermediate steps and prompts
# are visible to the user (see the output below for the full log).

# Large Language Model to use
llm = ChatOpenAI(temperature=0, verbose=True)

# Load predefined tools
tools = load_tools(["ddg-search", "arxiv", "pubmed", "wikipedia"])

# Create planner agent
planner = load_chat_planner(llm)
planner.llm_chain.verbose = True

# Create executor agent
executor = load_agent_executor(llm, tools, verbose=True)

# Stitch together the Plan-and-Execute agent
agent = PlanAndExecute(planner=planner, executor=executor, verbose=True)

# Define callbacks for extensive logging
callbacks = [StdOutCallbackHandler()]

# Run agent with task
agent.run("Is LK-99 a superconductor?", callbacks=callbacks)
```

Output

> Entering new PlanAndExecute chain... > Entering new LLMChain chain... Prompt after formatting: System: Let's first understand the problem and devise a plan to solve the problem. Please output the plan starting with the header 'Plan:' and then followed by a numbered list of steps. Please make the plan the minimum number of steps required to accurately complete the task. If the task is a question, the final step should almost always be 'Given the above steps taken, please respond to the users original question'. At the end of your plan, say '<END_OF_PLAN>' Human: Is LK-99 a superconductor? > Finished chain. steps=[Step(value='Search for information about LK-99 to determine if it is a superconductor.'), Step(value='Check reliable scientific sources and databases for any information on the superconducting properties of LK-99.'), Step(value='Analyze the gathered information to determine if LK-99 is indeed a superconductor.'), Step(value="Given the above steps taken, respond to the user's original question. \n")] > Entering new AgentExecutor chain... > Entering new LLMChain chain... Prompt after formatting: System: Respond to the human as helpfully and accurately as possible. You have access to the following tools: duckduckgo_search: A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} arxiv: A wrapper around Arxiv.org Useful for when you need to answer questions about Physics, Mathematics, Computer Science, Quantitative Biology, Quantitative Finance, Statistics, Electrical Engineering, and Economics from scientific articles on arxiv.org. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} PubMed: A wrapper around PubMed. Useful for when you need to answer questions about medicine, health, and biomedical topics from biomedical literature, MEDLINE, life science journals, and online books. 
Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input). Valid "action" values: "Final Answer" or duckduckgo_search, arxiv, PubMed, Wikipedia Provide only ONE action per $JSON_BLOB, as shown: ``` { "action": $TOOL_NAME, "action_input": $INPUT } ``` Follow this format: Question: input question to answer Thought: consider previous and subsequent steps Action: ``` $JSON_BLOB ``` Observation: action result ... (repeat Thought/Action/Observation N times) Thought: I know what to respond Action: ``` { "action": "Final Answer", "action_input": "Final response to human" } ``` Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:. Thought: Human: Previous steps: steps=[] Current objective: value='Search for information about LK-99 to determine if it is a superconductor.' > Finished chain. Action: ``` { "action": "duckduckgo_search", "action_input": { "query": "LK-99 superconductor" } } ``` Observation: LK-99 isn't a superconductor — how science sleuths solved the mystery Efforts to replicate the material have pieced together the puzzle of why it displayed superconducting-like behaviours. Dan... 08/23/23 In Nature and in a published preprint, Illinois professor Prashant Jain has explained "an important confounding effect" that helps unravel the mystery surrounding recent reports that LK-99, a compound of copper, lead, phosphorus and oxygen, was working like a superconductor at room temperature and ambient pressure. Party like it's LK-99. 
Claims of a room-temperature, ambient-pressure superconductor recently kicked up a storm on social media. As the dust settles, we take stock of what this experience can ... The past few weeks have seen a huge surge of interest among scientists and the public in a material called LK-99 after it was claimed to be a superconductor at room temperature and ambient... Some scientists expressed serious doubts about the LK-99 superconductor but, because of its potential to transform life as we know it, the concept has become an obsession with everyone from... Thought: > Entering new LLMChain chain... Prompt after formatting: System: Respond to the human as helpfully and accurately as possible. You have access to the following tools: duckduckgo_search: A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} arxiv: A wrapper around Arxiv.org Useful for when you need to answer questions about Physics, Mathematics, Computer Science, Quantitative Biology, Quantitative Finance, Statistics, Electrical Engineering, and Economics from scientific articles on arxiv.org. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} PubMed: A wrapper around PubMed. Useful for when you need to answer questions about medicine, health, and biomedical topics from biomedical literature, MEDLINE, life science journals, and online books. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input). 
Valid "action" values: "Final Answer" or duckduckgo_search, arxiv, PubMed, Wikipedia Provide only ONE action per $JSON_BLOB, as shown: ``` { "action": $TOOL_NAME, "action_input": $INPUT } ``` Follow this format: Question: input question to answer Thought: consider previous and subsequent steps Action: ``` $JSON_BLOB ``` Observation: action result ... (repeat Thought/Action/Observation N times) Thought: I know what to respond Action: ``` { "action": "Final Answer", "action_input": "Final response to human" } ``` Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:. Thought: Human: Previous steps: steps=[] Current objective: value='Search for information about LK-99 to determine if it is a superconductor.' This was your previous work (but I haven't seen any of it! I only see what you return as final answer): Action: ``` { "action": "duckduckgo_search", "action_input": { "query": "LK-99 superconductor" } } ``` Observation: LK-99 isn't a superconductor — how science sleuths solved the mystery Efforts to replicate the material have pieced together the puzzle of why it displayed superconducting-like behaviours. Dan... 08/23/23 In Nature and in a published preprint, Illinois professor Prashant Jain has explained "an important confounding effect" that helps unravel the mystery surrounding recent reports that LK-99, a compound of copper, lead, phosphorus and oxygen, was working like a superconductor at room temperature and ambient pressure. Party like it's LK-99. Claims of a room-temperature, ambient-pressure superconductor recently kicked up a storm on social media. As the dust settles, we take stock of what this experience can ... The past few weeks have seen a huge surge of interest among scientists and the public in a material called LK-99 after it was claimed to be a superconductor at room temperature and ambient... 
Some scientists expressed serious doubts about the LK-99 superconductor but, because of its potential to transform life as we know it, the concept has become an obsession with everyone from... Thought: > Finished chain. Based on the search results, it seems that there were initial claims that LK-99, a compound of copper, lead, phosphorus, and oxygen, was working as a superconductor at room temperature and ambient pressure. However, further investigation and replication attempts have revealed that LK-99 is not a superconductor. There is an explanation provided by Illinois professor Prashant Jain regarding a confounding effect that helped unravel the mystery. Some scientists have expressed doubts about the LK-99 superconductor claims. > Finished chain. ***** Step: Search for information about LK-99 to determine if it is a superconductor. Response: Based on the search results, it seems that there were initial claims that LK-99, a compound of copper, lead, phosphorus, and oxygen, was working as a superconductor at room temperature and ambient pressure. However, further investigation and replication attempts have revealed that LK-99 is not a superconductor. There is an explanation provided by Illinois professor Prashant Jain regarding a confounding effect that helped unravel the mystery. Some scientists have expressed doubts about the LK-99 superconductor claims. > Entering new AgentExecutor chain... > Entering new LLMChain chain... Prompt after formatting: System: Respond to the human as helpfully and accurately as possible. You have access to the following tools: duckduckgo_search: A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. 
Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} arxiv: A wrapper around Arxiv.org Useful for when you need to answer questions about Physics, Mathematics, Computer Science, Quantitative Biology, Quantitative Finance, Statistics, Electrical Engineering, and Economics from scientific articles on arxiv.org. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} PubMed: A wrapper around PubMed. Useful for when you need to answer questions about medicine, health, and biomedical topics from biomedical literature, MEDLINE, life science journals, and online books. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input). Valid "action" values: "Final Answer" or duckduckgo_search, arxiv, PubMed, Wikipedia Provide only ONE action per $JSON_BLOB, as shown: ``` { "action": $TOOL_NAME, "action_input": $INPUT } ``` Follow this format: Question: input question to answer Thought: consider previous and subsequent steps Action: ``` $JSON_BLOB ``` Observation: action result ... (repeat Thought/Action/Observation N times) Thought: I know what to respond Action: ``` { "action": "Final Answer", "action_input": "Final response to human" } ``` Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:. 
Thought: Human: Previous steps: steps=[(Step(value='Search for information about LK-99 to determine if it is a superconductor.'), StepResponse(response='Based on the search results, it seems that there were initial claims that LK-99, a compound of copper, lead, phosphorus, and oxygen, was working as a superconductor at room temperature and ambient pressure. However, further investigation and replication attempts have revealed that LK-99 is not a superconductor. There is an explanation provided by Illinois professor Prashant Jain regarding a confounding effect that helped unravel the mystery. Some scientists have expressed doubts about the LK-99 superconductor claims.'))] Current objective: value='Check reliable scientific sources and databases for any information on the superconducting properties of LK-99.' > Finished chain. Action: ``` { "action": "arxiv", "action_input": { "query": "LK-99 superconductor" } } ``` Observation: Published: 2023-08-26 Title: Synthesis of possible room temperature superconductor LK-99:Pb$_9$Cu(PO$_4$)$_6$O Authors: Kapil Kumar, N. K. Karn, V. P. S. Awana Summary: The quest for room-temperature superconductors has been teasing scientists and physicists, since its inception in 1911 itself. Several assertions have already been made about room temperature superconductivity but were never verified or reproduced across the labs. The cuprates were the earliest high transition temperature superconductors, and it seems that copper has done the magic once again. Last week, a Korean group synthesized a Lead Apatite-based compound LK-99, showing a T$_c$ of above 400$^\circ$K. The signatures of superconductivity in the compound are very promising, in terms of resistivity (R = 0) and diamagnetism at T$_c$. Although, the heat capacity (C$_p$) did not show the obvious transition at T$_c$. 
Inspired by the interesting claims of above room temperature superconductivity in LK-99, in this article, we report the synthesis of polycrystalline samples of LK-99, by following the same heat treatment as reported in [1,2] by the two-step precursor method. The phase is confirmed through X-ray diffraction (XRD) measurements, performed after each heat treatment. The room temperature diamagnetism is not evidenced by the levitation of a permanent magnet over the sample or vice versa. Further measurements for the confirmation of bulk superconductivity on variously synthesized samples are underway. Our results on the present LK-99 sample, being synthesized at 925$^\circ$C, as of now do not approve the appearance of bulk superconductivity at room temperature. Further studies with different heat treatments are though, yet underway. Published: 2015-09-17 Title: Superfluid density of a pseudogapped superconductor near SIT Authors: M. V. Feigel'man, L. B. Ioffe Summary: We analyze critical behavior of superfluid density $\rho_s$ in strongly disordered superconductors near superconductor-insulator transition and compare it with the behavior of the spectral gap $\Delta$ for collective excitations. We show that in contrast to conventional superconductors, the superconductors with preformed pairs display unusual scaling relation $\rho_s \propto \Delta^2$ close to superconductor-insulator transition. This relation have been reported in very recent experiments. Published: 2004-08-31 Title: Supercurrent in Nodal Superconductors Authors: Igor Khavkine, Hae-Young Kee, Kazumi Maki Summary: In recent years, a number of nodal superconductors have been identified; d-wave superconductors in high T_c cuprates, CeCoIn$_5$, and \kappa-(ET)_2Cu(NCS)_2, 2D f-wave superconductor in Sr_2RuO_4 and hybrid s+g-wave superconductor in YNi_2B_2C. In this work we conduct a theoretical study of nodal superconductors in the presence of supercurrent. 
For simplicity, we limit ourselves to d-wave and 2D f-wave superconductors. We compute the quasiparticle density of states and the temperature dependence of the depairing critical current in nodal superconductors, both of which are accessible experimentally. Thought: > Entering new LLMChain chain... Prompt after formatting: System: Respond to the human as helpfully and accurately as possible. You have access to the following tools: duckduckgo_search: A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} arxiv: A wrapper around Arxiv.org Useful for when you need to answer questions about Physics, Mathematics, Computer Science, Quantitative Biology, Quantitative Finance, Statistics, Electrical Engineering, and Economics from scientific articles on arxiv.org. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} PubMed: A wrapper around PubMed. Useful for when you need to answer questions about medicine, health, and biomedical topics from biomedical literature, MEDLINE, life science journals, and online books. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}} Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input). 
Valid "action" values: "Final Answer" or duckduckgo_search, arxiv, PubMed, Wikipedia Provide only ONE action per $JSON_BLOB, as shown: ``` { "action": $TOOL_NAME, "action_input": $INPUT } ``` Follow this format: Question: input question to answer Thought: consider previous and subsequent steps Action: ``` $JSON_BLOB ``` Observation: action result ... (repeat Thought/Action/Observation N times) Thought: I know what to respond Action: ``` { "action": "Final Answer", "action_input": "Final response to human" } ``` Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:. Thought: Human: Previous steps: steps=[(Step(value='Search for information about LK-99 to determine if it is a superconductor.'), StepResponse(response='Based on the search results, it seems that there were initial claims that LK-99, a compound of copper, lead, phosphorus, and oxygen, was working as a superconductor at room temperature and ambient pressure. However, further investigation and replication attempts have revealed that LK-99 is not a superconductor. There is an explanation provided by Illinois professor Prashant Jain regarding a confounding effect that helped unravel the mystery. Some scientists have expressed doubts about the LK-99 superconductor claims.'))] Current objective: value='Check reliable scientific sources and databases for any information on the superconducting properties of LK-99.' This was your previous work (but I haven't seen any of it! I only see what you return as final answer): Action: ``` { "action": "arxiv", "action_input": { "query": "LK-99 superconductor" } } ``` Observation: Published: 2023-08-26 Title: Synthesis of possible room temperature superconductor LK-99:Pb$_9$Cu(PO$_4$)$_6$O Authors: Kapil Kumar, N. K. Karn, V. P. S. 
Awana Summary: The quest for room-temperature superconductors has been teasing scientists and physicists, since its inception in 1911 itself. Several assertions have already been made about room temperature superconductivity but were never verified or reproduced across the labs. The cuprates were the earliest high transition temperature superconductors, and it seems that copper has done the magic once again. Last week, a Korean group synthesized a Lead Apatite-based compound LK-99, showing a T$_c$ of above 400$^\circ$K. The signatures of superconductivity in the compound are very promising, in terms of resistivity (R = 0) and diamagnetism at T$_c$. Although, the heat capacity (C$_p$) did not show the obvious transition at T$_c$. Inspired by the interesting claims of above room temperature superconductivity in LK-99, in this article, we report the synthesis of polycrystalline samples of LK-99, by following the same heat treatment as reported in [1,2] by the two-step precursor method. The phase is confirmed through X-ray diffraction (XRD) measurements, performed after each heat treatment. The room temperature diamagnetism is not evidenced by the levitation of a permanent magnet over the sample or vice versa. Further measurements for the confirmation of bulk superconductivity on variously synthesized samples are underway. Our results on the present LK-99 sample, being synthesized at 925$^\circ$C, as of now do not approve the appearance of bulk superconductivity at room temperature. Further studies with different heat treatments are though, yet underway. Published: 2015-09-17 Title: Superfluid density of a pseudogapped superconductor near SIT Authors: M. V. Feigel'man, L. B. Ioffe Summary: We analyze critical behavior of superfluid density $\rho_s$ in strongly disordered superconductors near superconductor-insulator transition and compare it with the behavior of the spectral gap $\Delta$ for collective excitations. 
We show that in contrast to conventional superconductors, the superconductors with preformed pairs display unusual scaling relation $\rho_s \propto \Delta^2$ close to superconductor-insulator transition. This relation have been reported in very recent experiments. Published: 2004-08-31 Title: Supercurrent in Nodal Superconductors Authors: Igor Khavkine, Hae-Young Kee, Kazumi Maki Summary: In recent years, a number of nodal superconductors have been identified; d-wave superconductors in high T_c cuprates, CeCoIn$_5$, and \kappa-(ET)_2Cu(NCS)_2, 2D f-wave superconductor in Sr_2RuO_4 and hybrid s+g-wave superconductor in YNi_2B_2C. In this work we conduct a theoretical study of nodal superconductors in the presence of supercurrent. For simplicity, we limit ourselves to d-wave and 2D f-wave superconductors. We compute the quasiparticle density of states and the temperature dependence of the depairing critical current in nodal superconductors, both of which are accessible experimentally. Thought: > Finished chain. Based on the search results from the arXiv database, there is a publication titled "Synthesis of possible room temperature superconductor LK-99:Pb$_9$Cu(PO$_4$)$_6$O" by Kapil Kumar, N. K. Karn, and V. P. S. Awana. The publication discusses the synthesis of polycrystalline samples of LK-99 and the investigation of its superconducting properties. However, the results of the study do not approve the appearance of bulk superconductivity at room temperature. Further studies with different heat treatments are still underway. There are also other publications related to superconductivity, but they do not specifically mention LK-99. One publication discusses the critical behavior of superfluid density in strongly disordered superconductors near the superconductor-insulator transition. Another publication focuses on the study of nodal superconductors in the presence of supercurrent. 
Based on these findings, it seems that there were initial claims about LK-99 being a possible room temperature superconductor, but further studies have not confirmed this.
> Finished chain.
*****
Step: Check reliable scientific sources and databases for any information on the superconducting properties of LK-99.
Response: Based on the search results from the arXiv database, there is a publication titled "Synthesis of possible room temperature superconductor LK-99:Pb$_9$Cu(PO$_4$)$_6$O" by Kapil Kumar, N. K. Karn, and V. P. S. Awana. The publication discusses the synthesis of polycrystalline samples of LK-99 and the investigation of its superconducting properties. However, the results of the study do not approve the appearance of bulk superconductivity at room temperature. Further studies with different heat treatments are still underway.
There are also other publications related to superconductivity, but they do not specifically mention LK-99. One publication discusses the critical behavior of superfluid density in strongly disordered superconductors near the superconductor-insulator transition. Another publication focuses on the study of nodal superconductors in the presence of supercurrent.
Based on these findings, it seems that there were initial claims about LK-99 being a possible room temperature superconductor, but further studies have not confirmed this.
> Entering new AgentExecutor chain...
> Entering new LLMChain chain...
Prompt after formatting:
System: Respond to the human as helpfully and accurately as possible. You have access to the following tools:
duckduckgo_search: A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
arxiv: A wrapper around Arxiv.org Useful for when you need to answer questions about Physics, Mathematics, Computer Science, Quantitative Biology, Quantitative Finance, Statistics, Electrical Engineering, and Economics from scientific articles on arxiv.org. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
PubMed: A wrapper around PubMed. Useful for when you need to answer questions about medicine, health, and biomedical topics from biomedical literature, MEDLINE, life science journals, and online books. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).
Valid "action" values: "Final Answer" or duckduckgo_search, arxiv, PubMed, Wikipedia
Provide only ONE action per $JSON_BLOB, as shown: ``` { "action": $TOOL_NAME, "action_input": $INPUT } ```
Follow this format:
Question: input question to answer
Thought: consider previous and subsequent steps
Action: ``` $JSON_BLOB ```
Observation: action result
... (repeat Thought/Action/Observation N times)
Thought: I know what to respond
Action: ``` { "action": "Final Answer", "action_input": "Final response to human" } ```
Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:.
Thought:
Human: Previous steps: steps=[(Step(value='Search for information about LK-99 to determine if it is a superconductor.'), StepResponse(response='Based on the search results, it seems that there were initial claims that LK-99, a compound of copper, lead, phosphorus, and oxygen, was working as a superconductor at room temperature and ambient pressure. However, further investigation and replication attempts have revealed that LK-99 is not a superconductor. There is an explanation provided by Illinois professor Prashant Jain regarding a confounding effect that helped unravel the mystery. Some scientists have expressed doubts about the LK-99 superconductor claims.')), (Step(value='Check reliable scientific sources and databases for any information on the superconducting properties of LK-99.'), StepResponse(response='Based on the search results from the arXiv database, there is a publication titled "Synthesis of possible room temperature superconductor LK-99:Pb$_9$Cu(PO$_4$)$_6$O" by Kapil Kumar, N. K. Karn, and V. P. S. Awana. The publication discusses the synthesis of polycrystalline samples of LK-99 and the investigation of its superconducting properties. However, the results of the study do not approve the appearance of bulk superconductivity at room temperature. Further studies with different heat treatments are still underway.\n\nThere are also other publications related to superconductivity, but they do not specifically mention LK-99. One publication discusses the critical behavior of superfluid density in strongly disordered superconductors near the superconductor-insulator transition. Another publication focuses on the study of nodal superconductors in the presence of supercurrent.\n\nBased on these findings, it seems that there were initial claims about LK-99 being a possible room temperature superconductor, but further studies have not confirmed this.'))]
Current objective: value='Analyze the gathered information to determine if LK-99 is indeed a superconductor.'
> Finished chain.
Thought: Based on the previous steps, it seems that there were initial claims about LK-99 being a possible room temperature superconductor. However, further investigation and replication attempts have not confirmed this. The arXiv database search also revealed a publication discussing the synthesis of LK-99 and the investigation of its superconducting properties, but the results did not approve the appearance of bulk superconductivity at room temperature.
Action: ``` { "action": "Final Answer", "action_input": "Based on the gathered information, it can be concluded that LK-99 is not a superconductor." } ```
> Finished chain.
*****
Step: Analyze the gathered information to determine if LK-99 is indeed a superconductor.
Response: Based on the gathered information, it can be concluded that LK-99 is not a superconductor.
> Entering new AgentExecutor chain...
> Entering new LLMChain chain...
Prompt after formatting:
System: Respond to the human as helpfully and accurately as possible. You have access to the following tools:
duckduckgo_search: A wrapper around DuckDuckGo Search. Useful for when you need to answer questions about current events. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
arxiv: A wrapper around Arxiv.org Useful for when you need to answer questions about Physics, Mathematics, Computer Science, Quantitative Biology, Quantitative Finance, Statistics, Electrical Engineering, and Economics from scientific articles on arxiv.org. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
PubMed: A wrapper around PubMed. Useful for when you need to answer questions about medicine, health, and biomedical topics from biomedical literature, MEDLINE, life science journals, and online books. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query., args: {{'query': {{'title': 'Query', 'type': 'string'}}}}
Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).
Valid "action" values: "Final Answer" or duckduckgo_search, arxiv, PubMed, Wikipedia
Provide only ONE action per $JSON_BLOB, as shown: ``` { "action": $TOOL_NAME, "action_input": $INPUT } ```
Follow this format:
Question: input question to answer
Thought: consider previous and subsequent steps
Action: ``` $JSON_BLOB ```
Observation: action result
... (repeat Thought/Action/Observation N times)
Thought: I know what to respond
Action: ``` { "action": "Final Answer", "action_input": "Final response to human" } ```
Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:.
Thought:
Human: Previous steps: steps=[(Step(value='Search for information about LK-99 to determine if it is a superconductor.'), StepResponse(response='Based on the search results, it seems that there were initial claims that LK-99, a compound of copper, lead, phosphorus, and oxygen, was working as a superconductor at room temperature and ambient pressure. However, further investigation and replication attempts have revealed that LK-99 is not a superconductor. There is an explanation provided by Illinois professor Prashant Jain regarding a confounding effect that helped unravel the mystery. Some scientists have expressed doubts about the LK-99 superconductor claims.')), (Step(value='Check reliable scientific sources and databases for any information on the superconducting properties of LK-99.'), StepResponse(response='Based on the search results from the arXiv database, there is a publication titled "Synthesis of possible room temperature superconductor LK-99:Pb$_9$Cu(PO$_4$)$_6$O" by Kapil Kumar, N. K. Karn, and V. P. S. Awana. The publication discusses the synthesis of polycrystalline samples of LK-99 and the investigation of its superconducting properties. However, the results of the study do not approve the appearance of bulk superconductivity at room temperature. Further studies with different heat treatments are still underway.\n\nThere are also other publications related to superconductivity, but they do not specifically mention LK-99. One publication discusses the critical behavior of superfluid density in strongly disordered superconductors near the superconductor-insulator transition. Another publication focuses on the study of nodal superconductors in the presence of supercurrent.\n\nBased on these findings, it seems that there were initial claims about LK-99 being a possible room temperature superconductor, but further studies have not confirmed this.')), (Step(value='Analyze the gathered information to determine if LK-99 is indeed a superconductor.'), StepResponse(response='Based on the gathered information, it can be concluded that LK-99 is not a superconductor.'))]
Current objective: value="Given the above steps taken, respond to the user's original question. \n"
> Finished chain.
Action: ``` { "action": "Final Answer", "action_input": "Based on the gathered information, it can be concluded that LK-99 is not a superconductor." } ```
> Finished chain.
*****
Step: Given the above steps taken, respond to the user's original question.
Response: Based on the gathered information, it can be concluded that LK-99 is not a superconductor.
> Finished chain.
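To make the trace above easier to follow, here is a heavily simplified sketch of the loop it records, written in plain Python rather than with LangChain. A planner returns a list of steps. For each step, an executor agent sees the previous steps and the current objective, and it has to reply with a single JSON blob after `Action:` that names either a tool or "Final Answer". Tool results are fed back as observations until a final answer closes the step. The last planned step ("Given the above steps taken, respond to the user's original question.") produces the overall answer. Everything here is an assumption made for illustration. `Step` and `StepResponse` only mimic the shape of the records printed in the log and are not imported from LangChain. The regex-based `parse_action` is not LangChain's output parser. `fake_planner`, `fake_llm` and `fake_tools` are hypothetical stubs that stand in for the language model and the search tools. The iteration cap is also a choice of this sketch.

```python
import json
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Minimal stand-ins that only mimic the records printed in the log (NOT LangChain classes).
@dataclass
class Step:
    value: str

@dataclass
class StepResponse:
    response: str

History = List[Tuple[Step, StepResponse]]
LLM = Callable[[Step, History, str], str]   # hypothetical: (step, history, scratchpad) -> reply
Tools = Dict[str, Callable[[str], str]]     # hypothetical: tool name -> function(query)

FENCE = "`" * 3  # the prompt asks for: Action: <fence> JSON blob <fence>
ACTION_RE = re.compile(r"Action:\s*" + FENCE + r"(.*?)" + FENCE, re.DOTALL)


def action_blob(action: str, action_input: str) -> str:
    """Render an agent reply in the format the prompt requires."""
    return f"Action: {FENCE}" + json.dumps({"action": action, "action_input": action_input}) + FENCE


def parse_action(llm_output: str) -> Tuple[str, str]:
    """Extract (action, action_input) from the single JSON blob after 'Action:'."""
    match = ACTION_RE.search(llm_output)
    if match is None:
        raise ValueError("no JSON blob found after 'Action:'")
    blob = json.loads(match.group(1))
    return blob["action"], blob["action_input"]


def run_executor(step: Step, history: History, llm: LLM, tools: Tools,
                 max_iterations: int = 5) -> StepResponse:
    """Thought/Action/Observation loop for one planned step."""
    scratchpad = ""
    for _ in range(max_iterations):
        output = llm(step, history, scratchpad)
        action, action_input = parse_action(output)
        if action == "Final Answer":
            return StepResponse(response=action_input)
        observation = tools[action](action_input)  # call the tool the agent chose
        scratchpad += f"{output}\nObservation: {observation}\nThought:"
    raise RuntimeError("executor did not reach a Final Answer")


def plan_and_execute(question: str, planner: Callable[[str], List[Step]],
                     llm: LLM, tools: Tools) -> str:
    """Plan once, run each step with the history of earlier steps, return the last response."""
    history: History = []
    for step in planner(question):
        history.append((step, run_executor(step, history, llm, tools)))
    return history[-1][1].response


# --- Hypothetical stubs standing in for the language model and the search tools ---
def fake_planner(question: str) -> List[Step]:
    return [Step("Search for information about LK-99."),
            Step("Given the above steps taken, respond to the user's original question.")]


def fake_llm(step: Step, history: History, scratchpad: str) -> str:
    if step.value.startswith("Search") and not scratchpad:
        return action_blob("duckduckgo_search", "LK-99 superconductor")
    if step.value.startswith("Search"):
        return action_blob("Final Answer", "Stub summary of the search results.")
    # Final planned step: answer from the previous step's response.
    return action_blob("Final Answer", history[-1][1].response)


fake_tools: Tools = {"duckduckgo_search": lambda query: f"(stub results for {query!r})"}

answer = plan_and_execute("Is LK-99 a superconductor?", fake_planner, fake_llm, fake_tools)
print(answer)
```

Replacing the stubs with real model calls and real search wrappers would give the basic architecture described above. Keeping each executor run bounded by a fixed number of iterations is one simple guard against an agent that never decides a step is finished.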